Premier Strategies for Enhancing Arm Movement Efficiency

Advanced Techniques for Enhancing Arm Power and Precision

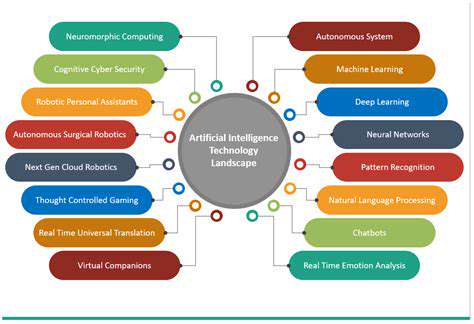

Optimizing Performance with Algorithmic Refinements

Careful algorithm design and implementation are central to performance in most applications. The aim is to reduce computational complexity (how running time and memory grow with input size) and to make better use of the available hardware. Choosing a more efficient algorithm can cut execution time substantially and improve system responsiveness. In practice this means analyzing the problem, locating the bottlenecks, and selecting the algorithm best suited to the task. For example, replacing a brute-force approach with a divide-and-conquer strategy often lowers the growth rate of the work itself: moving from a quadratic sort such as selection sort to merge sort reduces the cost from O(n²) to O(n log n) comparisons, and the gap widens as the dataset grows. Optimizations of this kind are what allow applications to scale.
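As an illustration of the brute-force versus divide-and-conquer contrast, here is a minimal Python sketch that counts inversions, that is, pairs of positions i < j with `values[i] > values[j]`. The function names are hypothetical. The brute-force version checks every pair in O(n²) time; the divide-and-conquer version splits the list, solves each half recursively, and counts the pairs that cross the split while merging the sorted halves, which takes O(n log n) time.

```python
def count_inversions_brute(values: list[int]) -> int:
    """O(n^2): examine every pair (i, j) with i < j."""
    n = len(values)
    return sum(
        1
        for i in range(n)
        for j in range(i + 1, n)
        if values[i] > values[j]
    )


def count_inversions_dc(values: list[int]) -> int:
    """O(n log n): merge sort that counts cross-half inversions while merging."""

    def sort_and_count(seq: list[int]) -> tuple[list[int], int]:
        if len(seq) <= 1:
            return seq, 0
        mid = len(seq) // 2
        left, inv_left = sort_and_count(seq[:mid])     # divide: left half
        right, inv_right = sort_and_count(seq[mid:])   # divide: right half
        merged: list[int] = []
        inversions = inv_left + inv_right
        i = j = 0
        while i < len(left) and j < len(right):        # combine: merge step
            if left[i] <= right[j]:
                merged.append(left[i])
                i += 1
            else:
                # right[j] is smaller than every remaining element of `left`,
                # so it forms one inversion with each of them.
                merged.append(right[j])
                inversions += len(left) - i
                j += 1
        merged.extend(left[i:])
        merged.extend(right[j:])
        return merged, inversions

    return sort_and_count(list(values))[1]
```

Both functions return the same count (8 for `[3, 1, 4, 1, 5, 9, 2, 6]`); only the amount of work differs, and that difference grows quickly with input size.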

Choosing data structures that match the task's access patterns matters just as much, because the data structure largely determines how efficiently an algorithm can run. Linked lists, trees, graphs, and hash tables each trade off memory usage, search speed, and insertion/deletion cost differently: a hash table offers constant-time lookup by key on average, whereas finding an element in an unsorted linked list requires a linear scan. Pairing well-chosen data structures with efficient algorithms can yield large performance gains in applications that handle massive amounts of data.
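To make the trade-off concrete, the following Python sketch (function names are hypothetical) solves one problem, finding the first repeated item in a sequence, with two different data structures. Keeping previously seen items in a list makes each membership test a linear scan, so the whole loop is O(n²) in the worst case; a set, which CPython implements as a hash table, makes each test O(1) on average, so the loop is O(n) on average. The set version assumes the items are hashable.

```python
from collections.abc import Hashable, Iterable
from typing import Optional


def first_duplicate_with_list(items: Iterable[Hashable]) -> Optional[Hashable]:
    """Seen items kept in a list: each `in` check scans it, O(n) per lookup."""
    seen: list[Hashable] = []
    for item in items:
        if item in seen:  # linear scan over everything seen so far
            return item
        seen.append(item)
    return None


def first_duplicate_with_set(items: Iterable[Hashable]) -> Optional[Hashable]:
    """Seen items kept in a set (a hash table): O(1) average per lookup."""
    seen: set[Hashable] = set()
    for item in items:
        if item in seen:  # hash lookup
            return item
        seen.add(item)
    return None
```

The two functions give identical answers; only the cost of each `in` check changes, which is exactly the kind of difference the choice of data structure controls.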

Leveraging Parallel Processing Strategies

Modern computing environments, with multiple cores and often multiple machines, can speed up many tasks through parallel processing. A complex problem is broken into smaller, independent sub-problems, which are distributed across processors or cores and solved at the same time. This approach is particularly effective for computationally intensive workloads such as image processing, scientific simulation, and large-scale data analysis, where it can substantially reduce the time needed to process enormous datasets or complex calculations. Parallel programming models such as OpenMP (shared-memory multithreading) and MPI (message passing between processes) help developers distribute and coordinate this work across threads or processors.
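OpenMP and MPI target languages such as C, C++, and Fortran. As a sketch of the same partition, distribute, and combine pattern, here is a Python version built on the standard library's `concurrent.futures`; the function names and the sum-of-squares workload are hypothetical. It defaults to `ThreadPoolExecutor`, but in standard CPython the global interpreter lock keeps threads from executing pure-Python code in parallel, so for CPU-bound work like this you would pass `ProcessPoolExecutor` instead, calling it from under an `if __name__ == "__main__":` guard.

```python
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Callable, Sequence


def sum_of_squares(chunk: Sequence[int]) -> int:
    """One independent sub-problem: uses no data from any other chunk."""
    return sum(x * x for x in chunk)


def split_into_chunks(values: Sequence[int], parts: int) -> list[Sequence[int]]:
    """Partition values into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(values) // parts))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def parallel_sum_of_squares(
    values: Sequence[int],
    workers: int = 4,
    executor_cls: Callable[..., Executor] = ThreadPoolExecutor,
) -> int:
    """Partition the input, run the chunks on a worker pool, combine the results."""
    chunks = split_into_chunks(values, workers)
    with executor_cls(max_workers=workers) as pool:
        # map() submits one task per chunk and yields results in input order.
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # Combine step: the only point where the workers' results meet.
    return sum(partial_sums)
```

Because each chunk needs nothing from the others, the only coordination required is the final `sum` over the partial results.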

Implementing parallel processing effectively requires attention to data dependencies and race conditions, which arise when several threads access shared data concurrently, at least one of them writes to it, and nothing orders those accesses. Synchronization mechanisms such as locks are needed to prevent data corruption and inconsistent results. At the same time, the overhead of partitioning tasks, synchronizing, and communicating between processors must be kept small; otherwise it can cancel out the benefit of running in parallel. Managing these costs well is what turns parallelism into an actual speedup.
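The following Python sketch (class and function names are hypothetical) contrasts two ways of handling shared state with the standard `threading` module. The first guards a shared counter with a `threading.Lock`, so no increment is lost, but every increment pays for acquiring the lock. The second lets each thread accumulate into a private local variable and write its result once, to its own slot, so the only coordination left is waiting for the threads with `join()`. This is one way, among others, to keep synchronization overhead low.

```python
import threading


class LockedCounter:
    """A shared counter whose read-modify-write update is guarded by a lock."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self, amount: int = 1) -> None:
        # `+=` is a read followed by a write; without the lock, two threads
        # could read the same old value and one update would be lost.
        with self._lock:
            self._value += amount

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def count_with_shared_lock(threads: int, per_thread: int) -> int:
    """Every increment contends for the same lock."""
    counter = LockedCounter()

    def work() -> None:
        for _ in range(per_thread):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return counter.value


def count_with_local_partials(threads: int, per_thread: int) -> int:
    """Each thread works on private state and publishes one result at the end."""
    partials = [0] * threads

    def work(index: int) -> None:
        local = 0
        for _ in range(per_thread):
            local += 1  # private to this thread, so no lock is needed
        partials[index] = local  # each thread writes only its own slot

    workers = [threading.Thread(target=work, args=(i,)) for i in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()  # after join(), every thread has finished writing its slot
    return sum(partials)
```

Both functions return the same total; the second simply synchronizes far less often.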

Employing Advanced Data Structures and Techniques

Data structures beyond basic arrays and linked lists can significantly improve algorithm performance. Balanced search trees such as red-black trees keep search, insertion, and deletion at O(log n) even in the worst case, and an in-order traversal returns their keys in sorted order; specialized graph representations, such as adjacency lists for sparse graphs, similarly speed up common queries. Using these structures well requires understanding the underlying data, the algorithms involved, and the operations the application performs most often, since together these determine which structure fits the task.
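Python's standard library does not include a red-black tree, so the sketch below substitutes an AVL tree, a different self-balancing binary search tree with the same O(log n) worst-case bounds for search and insertion. It is a minimal illustration rather than a library API: the `AVLTree` class is hypothetical, supports only integer keys, insertion, membership tests, and sorted iteration, and omits deletion for brevity. After each insertion, rotations restore the invariant that the heights of any node's two subtrees differ by at most one.

```python
from collections.abc import Iterator
from typing import Optional


class _Node:
    def __init__(self, key: int) -> None:
        self.key = key
        self.left: Optional["_Node"] = None
        self.right: Optional["_Node"] = None
        self.height = 1  # height of the subtree rooted here (a leaf is 1)


def _height(node: Optional[_Node]) -> int:
    return node.height if node is not None else 0


def _update(node: _Node) -> None:
    node.height = 1 + max(_height(node.left), _height(node.right))


def _rotate_right(y: _Node) -> _Node:
    x = y.left  # caller guarantees y.left exists
    y.left = x.right
    x.right = y
    _update(y)
    _update(x)
    return x


def _rotate_left(x: _Node) -> _Node:
    y = x.right  # caller guarantees x.right exists
    x.right = y.left
    y.left = x
    _update(x)
    _update(y)
    return y


def _rebalance(node: _Node) -> _Node:
    """Restore the AVL invariant: subtree heights differ by at most 1."""
    _update(node)
    balance = _height(node.left) - _height(node.right)
    if balance > 1:  # left-heavy
        if _height(node.left.left) < _height(node.left.right):
            node.left = _rotate_left(node.left)  # left-right case
        return _rotate_right(node)
    if balance < -1:  # right-heavy
        if _height(node.right.right) < _height(node.right.left):
            node.right = _rotate_right(node.right)  # right-left case
        return _rotate_left(node)
    return node


def _insert(node: Optional[_Node], key: int) -> _Node:
    if node is None:
        return _Node(key)
    if key < node.key:
        node.left = _insert(node.left, key)
    elif key > node.key:
        node.right = _insert(node.right, key)
    else:
        return node  # duplicate key: tree unchanged
    return _rebalance(node)


class AVLTree:
    def __init__(self) -> None:
        self._root: Optional[_Node] = None

    def insert(self, key: int) -> None:
        self._root = _insert(self._root, key)

    def __contains__(self, key: int) -> bool:
        node = self._root
        while node is not None:  # O(height) = O(log n) comparisons
            if key == node.key:
                return True
            node = node.left if key < node.key else node.right
        return False

    def __iter__(self) -> Iterator[int]:
        """In-order traversal, which yields keys in ascending order."""
        stack: list[_Node] = []
        node = self._root
        while stack or node is not None:
            while node is not None:
                stack.append(node)
                node = node.left
            node = stack.pop()
            yield node.key
            node = node.right

    @property
    def height(self) -> int:
        return _height(self._root)
```

Inserting keys in ascending order, which turns an unbalanced binary search tree into what is effectively a linked list, still produces a tree of logarithmic height here.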

Caching and memoization can also improve performance significantly. Caching keeps frequently accessed data in a fast, readily available location so that repeated requests avoid slower lookups; because cache space is limited, an eviction policy such as least-recently-used (LRU) decides what to discard. Memoization is a form of caching that stores the results of expensive function calls, keyed by their arguments, so repeated calls return the stored result instead of recomputing it; this is only safe when the function always returns the same result for the same inputs. Both techniques can yield substantial speedups, especially in recursive algorithms with repeated subproblems. As with data structures, how much they help depends on the access patterns of the data and on the algorithms involved.
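Here is a minimal Python sketch of both ideas using the standard library's `functools.lru_cache` decorator. The functions, the `body_runs` counter, and `load_record` (a stand-in for a slow lookup from disk or a network) are hypothetical. `fib_naive` recomputes overlapping subproblems and, for input n, runs its body 2*F(n+1) - 1 times, where F is the Fibonacci sequence; the memoized `fib_memo` runs its body only once per distinct argument. `load_record` shows a bounded cache: with `maxsize=2`, the least recently used entry is evicted when a third distinct key arrives.

```python
from functools import lru_cache

# Counts how many times each function body actually executes.
body_runs = {"naive": 0, "memo": 0}


def fib_naive(n: int) -> int:
    """Plain recursion: the same subproblems are recomputed exponentially often."""
    body_runs["naive"] += 1
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Memoized: the body runs once per distinct n; repeats are cache hits."""
    body_runs["memo"] += 1
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


@lru_cache(maxsize=2)
def load_record(record_id: int) -> str:
    """Hypothetical slow lookup, cached with least-recently-used eviction."""
    return f"record-{record_id}"
```

For n = 20, the naive body runs 21,891 times, while the memoized body runs 21 times, once for each n from 0 to 20. `load_record.cache_info()` reports hits, misses, and current size, which is a convenient way to check whether a cache is actually being hit.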